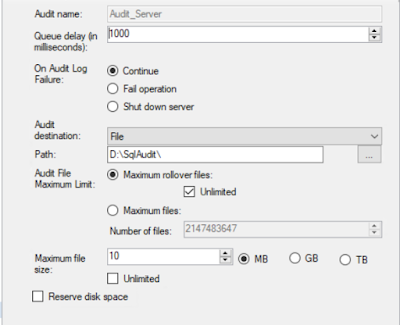

In on-premises SQL Server, the builtin retention policy of SQL Server Audit can only based on size and number of audit files. But almost all companies require to retain security log by days rather than log size. Below picture illustrates the builtin retention that provided by SQL Server.

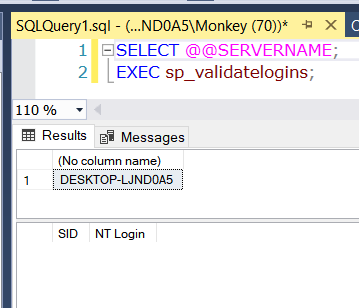

So how can you set the retention by days? It can only be done by creating a custom agent job to clear the old audit files. Below sql script is the creation script of that job:

USE [master]

GO

ALTER SERVER AUDIT [Audit_DB] WITH (STATE = OFF);

GO

ALTER SERVER AUDIT [Audit_Server] WITH (STATE = OFF);

GO

ALTER SERVER AUDIT [Audit_DB] TO FILE (MAX_ROLLOVER_FILES = 2147483647);

GO

ALTER SERVER AUDIT [Audit_Server] TO FILE (MAX_ROLLOVER_FILES = 2147483647);

GO

ALTER SERVER AUDIT [Audit_DB] WITH (STATE = ON);

GO

ALTER SERVER AUDIT [Audit_Server] WITH (STATE = ON);

GO

USE [msdb]

GO

EXEC msdb.dbo.sp_delete_job @job_name=N'Delete Old Audit Files', @delete_unused_schedule=1;

GO

/****** Object: Job [Delete Old Audit Files] Script Date: 23/11/2023 4:43:39 PM ******/

BEGIN TRANSACTION

DECLARE @ReturnCode INT

SELECT @ReturnCode = 0

/****** Object: JobCategory [Database Maintenance] Script Date: 23/11/2023 4:43:39 PM ******/

IF NOT EXISTS (SELECT name FROM msdb.dbo.syscategories WHERE name=N'Database Maintenance' AND category_class=1)

BEGIN

EXEC @ReturnCode = msdb.dbo.sp_add_category @class=N'JOB', @type=N'LOCAL', @name=N'Database Maintenance'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

END

DECLARE @jobId BINARY(16)

EXEC @ReturnCode = msdb.dbo.sp_add_job @job_name=N'Delete Old Audit Files',

@enabled=1,

@notify_level_eventlog=0,

@notify_level_email=0,

@notify_level_netsend=0,

@notify_level_page=0,

@delete_level=0,

@description=N'Delete Old Audit Files',

@category_name=N'Database Maintenance',

@owner_login_name=N'dbadmin', @job_id = @jobId OUTPUT

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

/****** Object: Step [1] Script Date: 23/11/2023 4:43:39 PM ******/

EXEC @ReturnCode = msdb.dbo.sp_add_jobstep @job_id=@jobId, @step_name=N'1',

@step_id=1,

@cmdexec_success_code=0,

@on_success_action=1,

@on_success_step_id=0,

@on_fail_action=2,

@on_fail_step_id=0,

@retry_attempts=0,

@retry_interval=0,

@os_run_priority=0, @subsystem=N'TSQL',

@command=N'EXEC sp_configure ''show advanced options'', 1;

RECONFIGURE;

EXEC sp_configure ''xp_cmdshell'', 1;

RECONFIGURE;

EXEC xp_cmdshell N''ForFiles /P "D:\SqlAudit" /C "cmd /c DEL @PATH" /D -365'';

EXEC sp_configure ''xp_cmdshell'', 0;

RECONFIGURE;

EXEC sp_configure ''show advanced options'', 0;

RECONFIGURE;',

@database_name=N'master',

@flags=0

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_update_job @job_id = @jobId, @start_step_id = 1

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobschedule @job_id=@jobId, @name=N'Delete Old Audit Files',

@enabled=1,

@freq_type=4,

@freq_interval=1,

@freq_subday_type=1,

@freq_subday_interval=0,

@freq_relative_interval=0,

@freq_recurrence_factor=0,

@active_start_date=20231123,

@active_end_date=99991231,

@active_start_time=0,

@active_end_time=235959,

@schedule_uid=N'6b104d29-a60c-43b5-b247-8fcb5c7916af'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

EXEC @ReturnCode = msdb.dbo.sp_add_jobserver @job_id = @jobId, @server_name = N'(local)'

IF (@@ERROR <> 0 OR @ReturnCode <> 0) GOTO QuitWithRollback

COMMIT TRANSACTION

GOTO EndSave

QuitWithRollback:

IF (@@TRANCOUNT > 0) ROLLBACK TRANSACTION

EndSave:

GO

The above script first set the number of audit files to unlimited. Then it create the agent job which deletes audit files older than 365 days, by using the forfiles and del windows commands. Beware that this agent job disables xp_cmdshell when it finished, so if your SQL Server uses xp_cmdshell in another part, you may need to change my job creation script.