This month blog post I would like to share a performance tuning

case. Actually I used to rely on missing index DMVs (I had a post

too) and get away from Database Engine Tuning Advisor (DTA) as DTA mostly give you too many indexes to add which will slow your database DML workload. But

recently I found there are a few cases that missing index suggestion

cannot give you the right index to add while DTA can, and these cases

are so obvious that even a developer just take a glance can make the index suggestion.

Let's take a look at the below experiment on StackOverflow2013

sample database:

USE StackOverflow2013

GO

SELECT MIN(CreationDate) FROM

Users;

SELECT MAX(CreationDate) FROM Users;

SELECT TOP 1

CreationDate FROM Users ORDER BY CreationDate;

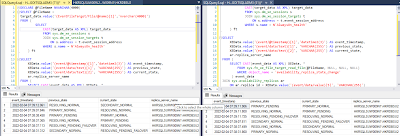

You can easily infer that an index on column CreationDate can

speed up these queries, but look at the actual execution plan, no any

missing indexes being suggested, seems SQL Server just happily to run

them by Cluster Index Scan:

You may say that because SQL Server thinks the table is too small,

so don't bother to consider index seek. But in fact the table has 2

millions rows, and the STATISTICS IO also shows that the scanning

operators are not cheap:

Table 'Users'. Scan count 1,

logical reads 44530,

physical reads 0, page server reads 0, read-ahead reads 0, page

server read-ahead reads 0, lob logical reads 0, lob physical reads 0,

lob page server reads 0, lob read-ahead reads 0, lob page server

read-ahead reads 0.

Table 'Users'. Scan count 1, logical

reads 44530, physical reads 0, page server

reads 0, read-ahead reads 0, page server read-ahead reads 0, lob

logical reads 0, lob physical reads 0, lob page server reads 0, lob

read-ahead reads 0, lob page server read-ahead reads 0.

Table

'Users'. Scan count 5, logical

reads 45184, physical reads 0, page server

reads 0, read-ahead reads 0, page server read-ahead reads 0, lob

logical reads 0, lob physical reads 0, lob page server reads 0, lob

read-ahead reads 0, lob page server read-ahead reads 0.

Table

'Users'. Scan count 5, logical

reads 45184, physical reads 0, page server

reads 0, read-ahead reads 0, page server read-ahead reads 0, lob

logical reads 0, lob physical reads 0, lob page server reads 0, lob

read-ahead reads 0, lob page server read-ahead reads 0.

Now let's try the same queries on DTA.

DTA can give you the good suggestion:

CREATE NONCLUSTERED INDEX

[_dta_index_Users_12_149575571__K4] ON [dbo].[Users]

(

[CreationDate] ASC

)WITH (SORT_IN_TEMPDB = OFF,

DROP_EXISTING = OFF, ONLINE = OFF) ON [PRIMARY]

Now let's add the new index suggested by DTA, then re-run the

workload queries in SSMS to check the actual execution plan and

statistics io:

Table 'Users'. Scan count 1, logical

reads 3, physical reads 0, page server reads 0, read-ahead

reads 0, page server read-ahead reads 0, lob logical reads 0, lob

physical reads 0, lob page server reads 0, lob read-ahead reads 0,

lob page server read-ahead reads 0.

Table 'Users'. Scan count 1,

logical reads 3, physical reads 0, page

server reads 0, read-ahead reads 0, page server read-ahead reads 0,

lob logical reads 0, lob physical reads 0, lob page server reads 0,

lob read-ahead reads 0, lob page server read-ahead reads 0.

Table

'Users'. Scan count 1, logical reads 3,

physical reads 0, page server reads 0, read-ahead reads 0, page

server read-ahead reads 0, lob logical reads 0, lob physical reads 0,

lob page server reads 0, lob read-ahead reads 0, lob page server

read-ahead reads 0.

Table 'Users'. Scan count 1, logical

reads 3, physical reads 0, page server reads 0, read-ahead

reads 0, page server read-ahead reads 0, lob logical reads 0, lob

physical reads 0, lob page server reads 0, lob read-ahead reads 0,

lob page server read-ahead reads 0.

As you can see, the new index suggested by DTA [_dta_index_XXX]

is being used, and the logical read is hugely reduced.

The conclusion is, rather than solely rely on missing index

suggestions, DTA should also be considered. We should compare both

results and merge the suggestions.

w

w